Outlier detection in SIR regression

Abstract

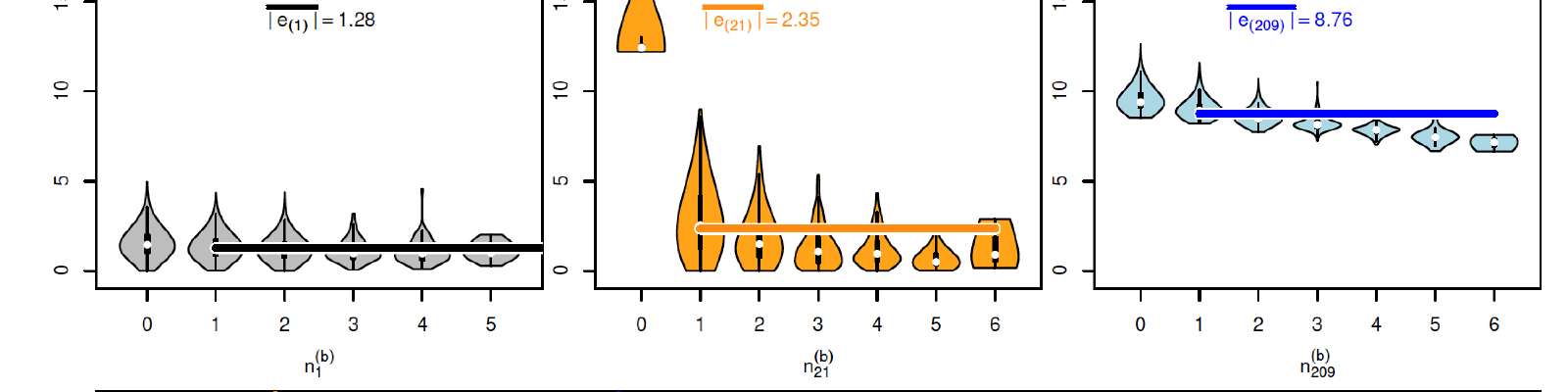

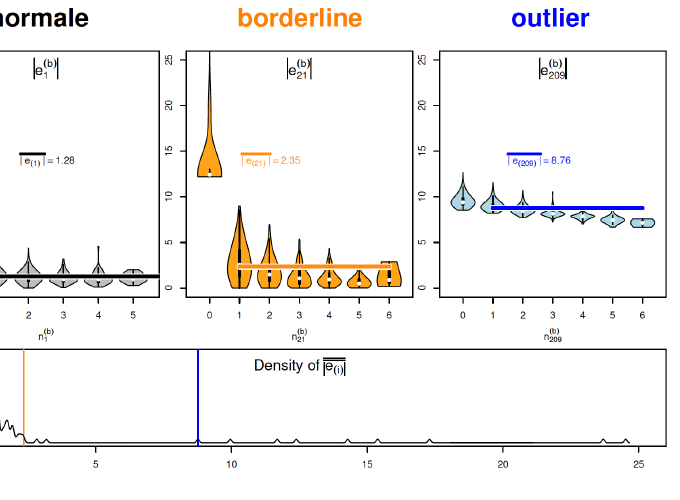

Sliced inverse regression (SIR) focuses on the relationship between a dependent variable $y$ and a $p$-dimensional explanatory variable $x$ in a semiparametric regression model in which the link relies on an index $x'\beta$ and a link function $f$. SIR makes it possible to estimate the direction of $\beta$, which spans the effective dimension reduction (EDR) space. Based on the estimated index, the link function can then be estimated nonparametrically using a kernel estimator. This two-step approach is sensitive to the presence of outliers in the data. The aim of this paper is to propose computational methods to detect outliers in this kind of single-index regression model. Three outlier detection methods are proposed, and their numerical behavior is illustrated on a simulated sample. To discriminate outliers from "normal" observations, the methods use in-bag (IB) or out-of-bag (OOB) prediction errors obtained from subsampling or resampling approaches. These methods, implemented in R, are compared with each other in a simulation study. An application to a real data set is also provided.
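To make the two-step approach concrete, the following is a minimal sketch in Python with NumPy, not the authors' R implementation. It assumes the textbook form of SIR (slicing on the sorted response, then an eigen-decomposition of the between-slice covariance) followed by a Nadaraya-Watson estimate of the link function with a Gaussian kernel. The function names `sir_direction` and `kernel_fit`, the cubic link, the number of slices and the bandwidth are all illustrative assumptions. The resampling layer that produces IB and OOB prediction errors is not reproduced here; the sketch only computes the plain residuals that such errors are based on.

```python
import numpy as np

def sir_direction(X, y, n_slices=10):
    """Estimate one EDR direction with basic SIR (hypothetical helper)."""
    n, p = X.shape
    cov = np.cov(X, rowvar=False)
    Xc = X - X.mean(axis=0)
    # Slice the sample into groups of roughly equal size along sorted y.
    slices = np.array_split(np.argsort(y), n_slices)
    # Weighted covariance matrix of the slice means of the centered x.
    M = np.zeros((p, p))
    for idx in slices:
        m = Xc[idx].mean(axis=0)
        M += (len(idx) / n) * np.outer(m, m)
    # The leading eigenvector of cov^{-1} M estimates the direction of beta.
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(cov, M))
    b = np.real(eigvecs[:, np.argmax(np.real(eigvals))])
    return b / np.linalg.norm(b)

def kernel_fit(index, y, grid, bandwidth):
    """Nadaraya-Watson estimate of the link function (Gaussian kernel)."""
    w = np.exp(-0.5 * ((grid[:, None] - index[None, :]) / bandwidth) ** 2)
    return (w @ y) / w.sum(axis=1)

# Simulated single-index data; the cubic link is an arbitrary choice.
rng = np.random.default_rng(0)
n, p = 500, 5
beta = np.array([1.0, -1.0, 0.0, 0.0, 0.0]) / np.sqrt(2)
X = rng.normal(size=(n, p))
y = (X @ beta) ** 3 + 0.1 * rng.normal(size=n)

# Step 1: estimate the direction; step 2: estimate the link on the index.
b_hat = sir_direction(X, y)
cos2 = float((b_hat @ beta) ** 2)  # squared cosine between b_hat and beta
index_hat = X @ b_hat
y_hat = kernel_fit(index_hat, y, index_hat, bandwidth=0.2)
residuals = y - y_hat  # in-sample prediction errors
```

The direction is identified only up to sign and scale, which is why the quality of `b_hat` is measured by a squared cosine rather than by a difference from `beta`. Because both steps use every observation, a few outlying points can distort the slice means and the kernel fit alike, which is the sensitivity the abstract refers to.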